The Transformative Power of Failure

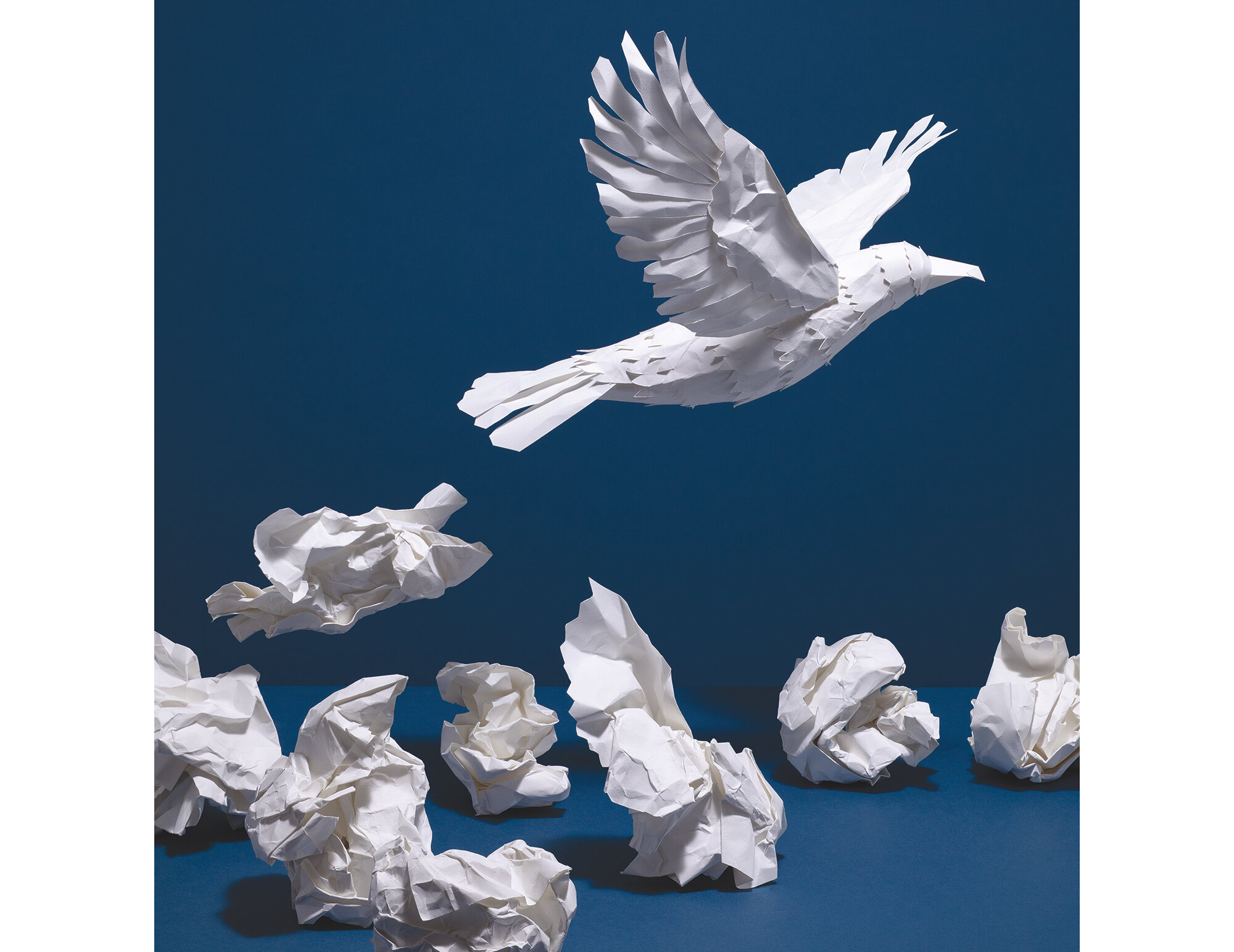

Illustration: Kyle Bean

Behind any scientific success story is a researcher who has persevered through failure and views it as not only inevitable but necessary.

by Andrew Moseman

The Hawaiian skies stayed clear over the Subaru Telescope on that first night in February, despite a gloomy forecast. The second night, too, offered an unobstructed view. And then, on night three, the telescope broke.

“We couldn’t open the dome,” says Konstantin Batygin (MS ’10, PhD ’12), Caltech professor of planetary science. “So, we wasted two, three hours just sitting there, watching Netflix and waiting until the telescope was fixed.” When Subaru finally got up and running, the fog rolled in.

For Batygin and Mike Brown, the Richard and Barbara Rosenberg Professor of Planetary Astronomy, a third-night glitch is a punch to the gut. The pair uses Subaru to hunt for Planet Nine, the large undiscovered world they have predicted to exist in the far reaches of the solar system based on the gravitational effects it seems to exert on other objects. Theirs is not a search that will culminate in the classic “aha!” moment when an astronomer spots the speck of a new world against the black backdrop of space. Instead, they must scan the sky for three consecutive nights, finding candidate objects on the first night, measuring their velocities on the second night, and measuring their accelerations on the third. All three steps are required to know whether a distant object could fit the predicted parameters of Planet Nine. But sometimes, on the third night, the telescope just won’t open.

Many Ways to Fail

“It’s not that these problems aren’t solvable,” Bil Clemons (above) says. “But when you think about the complexities of a cell where you’ve got hundreds of millions of molecules and all of this information flow back and forth at many different scales, at any given moment to understand what’s going on in that cell is beyond our reach right now.” Photo: Lance Hayashida

Fieldwork ruined by uncooperative instruments or inclement weather is just one way that failure can strike a scientific endeavor. “There are more ways to fail than just failing to prove something you’re out to prove,” says Omer Tamuz, professor of economics and mathematics. Sometimes scientists bang their heads against the desk for months and nothing comes of it. Sometimes a mathematician proves a theorem, writes the paper, and only then realizes someone else already proved it 20 years ago. Sometimes a researcher “discovers” something and then sees the world greet the achievement with deafening silence. “I’ve failed in that way also,” Tamuz laughs. “Some papers, I think they’re great, but I cannot get a decent journal to publish them because nobody else cares about this idea that I think is so magnificent.”

Failure lies around every corner of scientific life, notes biochemistry professor Bil Clemons. Papers and grant proposals are rejected. Tenure proves elusive. Promising projects go nowhere, while promising graduate students go elsewhere. All the while, problems bounce around in one’s brain for weeks or months with no solution in sight.

Yet there is another way to look at failure. To Clemons, it is inherent in Caltech’s signature brand of high-risk, high-reward science. Pursuing a bold new idea with transformative potential necessarily opens one up to the possibility of failing, sometimes in spectacular fashion. Often the path to a breakthrough starts with a failure, he says, but only if we are willing to stare failure right in the face and learn from it. Batygin goes further, arguing there can be no great success without failure. A performing guitarist when he is not looking for Planet Nine, Batygin compares the practice of science to playing an instrument. “If you’re learning something new, you’re going to stink at it for the first hundred times you play a passage, and then it’s going to be OK. In science, I think it’s a similar thing. There’s a process of getting the wrong answer time after time, and then something happens and it all snaps into place.”

Success in Disguise

In the 1970s, a new rumble emanated from seismology. “It was just at the beginning of the time we were thinking that earthquake prediction might be possible, after having a long time of saying ‘No. There’s no way,’” says Lucy Jones, visiting associate in geophysics. Caltech researchers had issued an earthquake prediction, and Chinese scientists at the Institute of Geology and Geophysics of the State Seismological Bureau in Beijing appeared to have successfully predicted a quake. When China began to normalize relations with the United States, Jones, then a 23-year-old doctoral student who had happened to study Chinese as an undergraduate, traveled to Beijing to see the predictive research firsthand; she was among the first American scientists to work in the country.

The heady days did not last. Caltech’s prediction did not pan out, while Jones’s trip to China revealed that country’s earthquake prediction to have been more of a lucky guess. “They didn’t have a better idea than we did of what made something look like a foreshock,” she says. (A foreshock is a smaller quake that precedes a larger one in the same location.) In time, it would become clear that earthquake prediction is not possible, but back then, Jones was convinced an answer could be found in the foreshocks. Surely some unique feature, like their pattern of relieving stress in the earth’s crust, would become a telltale sign to separate a harbinger of the Big One from the multitude of small earthquakes that occur every day. She gathered all the data she could find on known foreshocks to look for similarities or connections among them. The signature never showed up.

But something funny happened on the way to a failure: in the process of compiling foreshock data, Jones released what she calls “probably one of the simplest papers I’ve ever done.” It determined that about 6 percent of the time, a smaller earthquake precedes a larger earthquake in more or less the same spot. Now, when a quake occurs, such as the 6.4 that struck near Ridgecrest, California, in July 2019, Jones advises officials that there is about a one in 20 chance of a bigger shock to come. It happened in that Ridgecrest case, when a 7.1 quake occurred the next day. “Every earthquake warning issued in the state of California started in that work, which was just trying to set the baseline to go out and find the real things,” Jones says. “And we never found the real things. It turns out the baseline was really interesting.”

10,000 Wrongs to Make a Right

Photo: Lance Hayashida

Sometimes success comes disguised as a failure. But sometimes it simply means persevering through 10,000 failures to find a solution that works.

Aaron Ames, Bren Professor of Mechanical and Civil Engineering and Control and Dynamical Systems, builds bipedal robots: those that walk on two legs as humans do. The brain is so good at walking that most humans can do it unconsciously, but walking on two legs is actually a maddeningly complex process that boils down to falling and catching oneself with every step. “You have to land your foot in such a way that you catch yourself from falling in that moment,” Ames says, “but also propel yourself forward in the same motion.”

To make a working humanlike heel-toe gait (a gait being, essentially, a bipedal robot’s mathematically guided manner of walking), Ames begins with around 10,000 candidate gaits. Many of those never make it out of mathematical simulations because it becomes clear the robot would topple. Perhaps a hundred gaits survive long enough to be tested on the hardware, albeit with human scientists holding the unsteady automaton upright. When the Ames Lab finds the best few gaits of the bunch, the iterative process starts again. Each cycle of refinement inches the research toward a two-legged robot that can not only walk tall on a treadmill but smoothly traverse any unexpected terrain it might encounter in the outside world.

“Scientists want to make it sound like it’s really fun and rosy and you get to discover things, and it’s true,” says Julia Greer, the Ruben F. and Donna Mettler Professor of Materials Science, Mechanics, and Medical Engineering. “It’s just all in the context of many, many, many failures. Whether it’s the failure of the material or the failure of you to perform the right experiment, it’s frustrating. It doesn’t work the first time, and very often it doesn’t work the second or the nth time. That one time when it does, you have to have enough perseverance and wisdom … and the right state of mind.”

To Err Is Human

A human brain is a hypothesis machine. To John O’Doherty, professor of psychology, it can act like a scientist: it builds and tests models that make predictions about the world, and when the model fails, or when data prove the prediction wrong, the brain adapts its worldview. “Our brain has all this machinery that enables us to learn from the environment and make good predictions about what’s going to happen next,” he says. The O’Doherty Lab investigates the neurobiological mechanisms the brain uses to make those predictions, whether they are deeply rooted assumptions that a rustling in the bushes might be from a predator or the kind of sophisticated, goal-oriented decisions that guide an enterprise like science itself. Either way, the ability to learn from failure is a fundamental part of how the brain works.

For scientists like Tamuz, however, it can be hard to be objective and dispassionate about one’s lifework. When setting out to prove a mathematical theorem, Tamuz says, he cannot help but root for one outcome over another. Although proving something is false is just as valid a discovery as proving it to be true, it can be so much less satisfying. “That can be very dangerous because you might ignore all sorts of signs that you’re wrong. That will make you waste a lot of time.”

Indeed, often the instrument that fails is not a telescope, seismometer, or mass spectrometer, but the human brain, says Rob Manning (BS ’82), chief engineer at JPL, which Caltech manages for NASA. Part of the reason lies in the limitations of our human “hardware.” Consider our visual system, he says. People think of their eyeballs as a pair of super-high-resolution cameras that create this full field of view we see. In fact, a “stunningly small” amount of the information our eyes gather makes it to the visual cortex, Manning says. The brain fills in the blanks.

In the same way, Manning says, a scientist cannot possibly consider all the data in the universe. The brain unavoidably filters information, and, in doing so, sometimes masks important clues that could be used to adjust our hypotheses or worldview. “We tend to be overconfident about what we think we know,” he says. Just as the brain builds vision from the small clues that the eyeball sends to the visual cortex, we build our reasoning from fragments of information. “The information we get tends to affirm, not negate, the models we simply have in our brains, which is a defense mechanism … and that’s a problem.”

“Don’t Drink Your Own Kool-Aid”

“The worst enemy of any scientific or research pursuit is isolating yourself,” Julia Greer (left) says. “Most of us really like having colleagues who scrutinize and challenge us because that’s kind of like a reality check. Instead of getting defensive about it, you have to treat it as an opportunity to go through research in detail and to make sure that it’s right.” Photo: Lance Hayashida

Down at the nanoscale, where things happen on the order of a billionth of a meter, materials are not themselves. Graphite, which in everyday life cracks under pressure (think of a broken pencil tip), deforms and acts like rubber under intense stresses at the nanoscale. Some metals, meanwhile, suddenly become much stronger.

Greer studies these super-small-scale oddities and how to use them to build larger-scale materials with new properties. In graduate school, her team built nanoscale pillars of gold and measured their strength while crushing them. They showed that, while soft and malleable in common uses like jewelry, gold acts like steel at the nanoscale. In fact, the smaller the pillars, the stronger they appeared to be. So Greer kept building taller and thinner towers until one showed a truly staggering result. “At that point,” she says, “we were so drunk on our success that we boasted, ‘Hey, we just made 11-gigapascal-strong gold. That’s as strong as diamond. Look at what we did.’”

Outside observers questioned this extraordinary result when Greer presented the data at a conference, but she dug in, having repeated the experiment with the same result. “I was young, and I was ready to fight,” she says, so the team published the work. Later, Greer says, she found the true explanation for the outlier: the nanoindenter, the piece of equipment that crushes the gold pillars, was tilted slightly so that it exerted some of its force not on the nanopillar but on the platform it sat upon. Greer had to publish a correction, known as an erratum. “Sometimes you can get so engrossed in your own excitement that it blinds you to the point where you kind of lose sight of what’s real and what isn’t,” she says.

That is especially true in the nascent fields of science Caltech researchers love to explore. Batygin puts it this way: don’t drink your own Kool-Aid. The hunt for Planet Nine is a high-stakes pursuit subject to much criticism and skepticism, including numerous studies that claim to disprove its existence. (It is not surprising, he says, if you take the acrimony over Pluto’s demotion from planethood, the result of Brown’s own findings, as evidence of how much emotion is invested in the structure of the solar system.) So Brown and Batygin try to keep each other honest and seek out the weaknesses in their work long before those weaknesses appear in someone else’s claim to have debunked the existence of Planet Nine. “We’re always trying to find something wrong.”

High Stakes, High Rewards

Integral membrane proteins (IMPs) are crucial cellular connectors embedded within the cell membrane that separates the inside of the cell from the outside. Many pharmaceutical drugs target specific IMPs because they act as gateways. Yet the vast majority of IMPs remain uncharacterized because of the laborious trial and error required to do this work. Basically, structural biologists give an IMP’s DNA to bacteria and hope the bacteria will grow the protein. Eight times out of 10, that does not happen.

The Mars 2020 mission reached the Red Planet traveling at 12,500 miles per hour and decelerated to a standstill in just seven minutes, thanks in part to its impeccable parachute. Photo: NASA/JPL-Caltech

Clemons had a better idea: What if computer models could predict what would happen when bacteria receive those DNA segments? Such a process would do away with the time-intensive trial and error of the current method. He just had no idea whether it would work. (A couple of years into the process, he notes he still is not sure.) Clemons says his experience reflects a common dilemma. Chipping away using a current approach can be inefficient, but it works. It will create data and lead to published papers. Pursuing a new way to understand the problem, on the other hand, could lead to a leap forward or nothing at all. As a researcher in a publish-or-perish world, he says, one must balance projects that are likely to lead to tangible results with those that aim for something more profound.

The history of biology is replete with such risk-takers. Vaccines based on mRNA, like the Moderna and Pfizer COVID-19 vaccines, were born of one person’s insight that many other people dismissed. The same is true of the CRISPR gene-editing tool. And as Frances Arnold, the Linus Pauling Professor of Chemical Engineering, Bioengineering and Biochemistry, has noted, some people dismissed her work in directed evolution, which uses nature’s mechanisms to drive beneficial mutations and thereby create powerful new enzymes, and which won her the 2018 Nobel Prize in Chemistry.

“Frances Arnold is an example of somebody who was told that their ideas weren’t going to work,” Clemons says, “and that these were not problems that you could address and that she was doing it the wrong way. She basically said, ‘Look, I’m going to do the hard work to build the foundation for this.’ It’s the kind of thing where you have to believe in the principle, be willing to take the risk.”

The Human Way

Manning says making space to fail is the modus operandi at JPL, which leads high-profile missions such as Mars 2020, the mission that landed the Perseverance rover and, in April 2021, flew the Ingenuity helicopter. When something goes wrong on a space mission, it goes wrong explosively, publicly, and permanently. There is no mulligan for a Mars mission that crash-lands on the surface or misses the planet entirely, sending a few billion dollars of taxpayer money careening into the void. That may explain why Manning loves to talk about failure and vehemently objects to its vilification. “We have to create a venue for us to fail locally,” he says. “And so we have to create venues that allow us to discover the shortcomings in our design.”

Now that Mars 2020’s Perseverance rover and Ingenuity helicopter are safely on the Red Planet, Rob Manning (BS ’82; right) is part of a team thinking about a future mission that would bring samples of Mars back to Earth for the first time. Photo: NASA/JPL-Caltech

Failure, he says, is good, as long as scientists and engineers are willing to acknowledge it rather than sweep it under the rug because it spoils an intended result. (Ingenuity, for example, failed to take off on its fourth test flight in April 2021 because of a software glitch, one JPL fixed before its next test.) That is why a major part of JPL culture is the idea of encouraging colleagues to find the holes or weaknesses in a design. With the famously fraught landings of Mars rovers, JPL tests every crucial step countless times under simulated Martian conditions, slightly varying environmental or other factors to make sure the mission can overcome whatever it may encounter. What interests Manning the most from these numerous, varied simulations are the failures, because while 50,000 successes tell you nothing, one failure can be invaluably instructive. “We can go back to test-run 40,361, the one where everything went off the rails, and reproduce it,” he says.

One crucial caveat: it is impossible to test everything. Once, while Manning’s team sought to study the post-inflation dynamics of parachutes in the skies high over Hawaii, the chutes mysteriously exploded in test after test. After some frustration and head-scratching, the researchers realized that the model they had used to simulate Mars parachutes was flawed. It turns out that a chute inflates much faster in the thin atmosphere of Mars than JPL had realized, and therefore it endures more pressure when the chute snaps open. A computer simulation cannot catch a failure of imagination, Manning says, because the imperfect human brain cannot program what it does not know.

To Manning, the crucial point of such success stories is that they are made out of failure, and yet, he argues, we live in a world that grows increasingly intolerant of failure. Not everybody fails as spectacularly as a doomed Mars mission, but everyone in science has something at stake. Young people in academia feel the pressure to be “failure free” and to present perfect research, he says, never mind that good science is full of wrong turns and false starts.

“Everyone wants to write a paper that just shows nothing but the good things,” Manning says. “They don’t talk about the real road of how they got there, do they? The real road is they weren’t even trying to get that answer.”

There must be space in science to fail, Manning says. “People who get their hands dirty, who take risks, fail. I don’t want to see the future of STEM being for people who are risk-avoiders because of their fear of failure. The truth of the matter is, we stumble our way in the dark, and there’s nothing wrong with that. That is the human way, and that’s the way it’s always been. We should celebrate it.”