New Points of View: Caltech Imaging Research Reveals the Unseen

With a wave of new and improved technologies, Caltech researchers continue to change the way we visualize the worlds both in and around us.

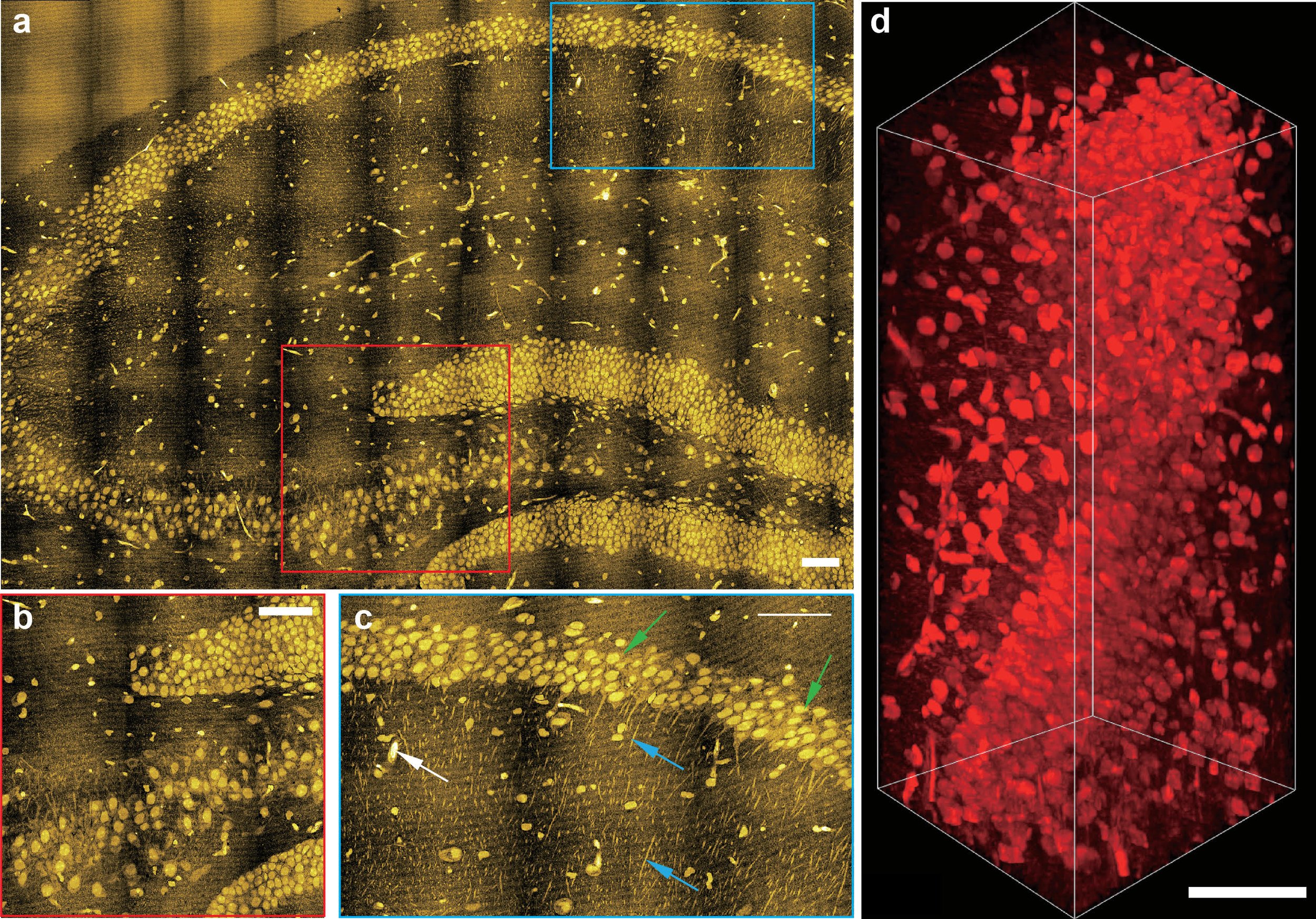

Cell imagery from the lab of Lu Wei. Super-resolution and volumetric tissue imaging by specialized microscopy can map chemical vibrations from proteins in the brain.

by Katie Neith

Chemist Lu Wei can remember the first time she truly saw the results of her research. The moment came when she was a graduate student and, with her lab mates, built a microscope. “When we got our first image, I literally jumped around,” Wei says. “Seeing really is believing.”

Wei has leaned into that sense of excitement ever since. Now in her own lab at Caltech, she uses new spectroscopy and microscopy methods to delve deep into biological tissues and track individual molecules in living cells. Wei is just one of many scientists and engineers at the Institute hard at work producing better pictures of objects as small as individual cells, as deadly as breast cancer tumors, and as far away as black holes. In an attempt to see deeper, farther, and faster, these researchers push the boundaries of traditional techniques by using new approaches, including some that incorporate artificial intelligence (AI), to identify patterns beyond the scope of the human eye.

Good Vibrations

To truly understand diseases and develop better ways to treat them, one needs to know what is happening in the body on a cellular level. Wei aims to do that through the design of innovative imaging techniques that exploit the unique vibrations of chemical bonds—which stretch and bend due to the constant motion of atoms—to visualize small biomolecules with high precision and resolution using the detected vibrations as coordinates. “For example, water molecules are made of O and H bonds that vibrate at a particular frequency,” Wei explains. “We can detect that vibration and map out where the water is in our cells and tissues and bodies.”

Wei’s team has built on this knowledge about how different chemical bonds vibrate and has developed nontoxic chemical tags that give off particular vibrations. These tags can be introduced into molecules to help researchers track them in the complex environments of living cells. Thanks to a specialized type of microscopy developed by Wei that can pick up the subtle vibrations, she has been able to probe the metabolic processes—or life-sustaining chemical reactions—inside different types of cells.

“With cancer and other diseases, we’re trying to find new or additional targets associated with metabolism that could help improve the efficiency of treatments,” Wei says. “Previously, we used this approach to pinpoint a couple of metabolic susceptibilities in melanoma cancer cells at the stem cell level. What was very impressive was that we were able to identify a process that directly linked to a very aggressive type of cancer cell.”

Now, she uses the same technologies and processes to explore metabolic regulation in cardiovascular disease and in brain tissue. “Because we’re in chemistry, we like to understand the fundamental aspects of exactly how something is being controlled,” Wei says. That kind of deep dive into how systems are regulated could also be used in efforts to make lithium-ion batteries safer. Following up on a project Wei initiated in 2018, the team plans to track the chemical dynamics of electrolyte distributions in batteries during charge cycles to figure out how to keep the batteries cooler. This could help address current safety issues that include fires, which are often caused by conditions related to electrolyte imbalances.

“In addition to biology, which is still my main interest, the instruments and techniques we’re developing have potential applications for other fields like renewable energy and materials science that I plan to explore further,” she says.

Seeing with Sound

Chemical engineer Mikhail Shapiro also wants to track functions cell by cell in the body to develop better health diagnostics and therapies: no easy feat, given that the human body holds some 37 trillion cells. But he uses a different kind of vibration to image activities deep within a cell’s natural habitat: sound waves, rather than the vibrations of chemical bonds.

To do this, Shapiro has pioneered a technique that uses genetic engineering to make genes dubbed “acoustic reporters” that produce air-filled proteins called gas vesicles when inserted into a cell. These vesicles, or “acoustic proteins,” contain pockets of air that can reflect sound waves, which allows them to be located and tracked using one of the most widely used imaging techniques in the world: ultrasound.

An artist’s representation shows gas vesicles inside a bacterium.

“The challenge has been that, historically, ultrasound has showed us anatomy, like where bones and muscles are, but it couldn’t show us specific cells,” Shapiro says. “Now, not only can we see where cells are located, but we can also look at their function because we can program them to only make the gas vesicles under certain conditions. This has opened up new potential for deep-tissue cellular imaging that was not previously possible.” Shapiro and his team can also amp up the ultrasound waves to a strength that can pop the gas vesicles instead of just pinging them; this results in a stronger signal that allows researchers to see much smaller quantities of the vesicles. This increased sensitivity has the potential to improve studies of the gut microbiome, where a large portion of immune cells live, by being able to home in on just a few cells in a sea of many. “One of our near-term ambitions is to visualize immune cells as they go around the body and seek out and attack pathogens or tumors,” Shapiro says. “We want to watch this drama unfolding in real time.”

As someone who studied neuroscience as an undergraduate, Shapiro is also heavily invested in learning about the brain. In fact, a lack of effective noninvasive technologies with which to study neurons is what drove Shapiro to pursue a PhD in biological engineering. Last year, he received grants for two neuroscience studies from the National Institutes of Health’s Brain Research Through Advancing Innovative Neurotechnologies (BRAIN) Initiative. One of these BRAIN-funded projects aims to image neural activity using ultrasound on a brain-wide scale as a means to help understand and develop better treatments for neurological disease. The other study is focused on brain-computer interfaces, and it involves the use of ultrasound to record brain signals in a less invasive way than current implants. The information gained from this study could, for example, be used to help paralyzed patients learn to perform various tasks with neuroprosthetics.

Ultimately, Shapiro hopes the technologies he develops will become a model for various types of research, with biology labs across the world placing ultrasound machines next to their optical microscopes. “In addition, I will be happy if physicians start using ultrasound to look at where their cell and gene therapies go inside the body and what they’re doing, so they can take corrective actions if the therapy is not doing what they want,” he says. “I hope that our acoustic proteins will make it possible for many more laboratories to see previously invisible things inside living beings.”

Laser Focus

In 2014, Caltech engineer Lihong Wang announced that he had succeeded in his quest to build the world’s fastest camera, the first one capable of capturing a light pulse, or laser beam, as it moves. Since then, he has improved upon his technology and built cameras that can see light scatter in slow motion, observe seemingly transparent objects, and produce 3-D videos. “We have to understand light before we fully understand the world and fully understand nature,” Wang says. “Light has the ultimate speed limit if Einstein is still correct. With our camera, for the first time, we can actually see a light pulse at light speed.”

Last year, in the January 13 issue of Science Advances, Wang reported on progress in his team’s study of chaotic systems with his compressed ultrafast photography (CUP) camera, capable of speeds as fast as 70 trillion frames per second. Chaotic systems, such as air turbulence and certain weather conditions, are notable for exhibiting behavior that is predictable at first but grows increasingly random with time. Their experiment observed laser light—which moves at extremely high speeds—scatter in a chamber designed to induce chaotic reflections. Figuring out how light moves under chaotic conditions has applications in physics, communications, cryptography, and flight navigation.

In addition, with a few modifications, Wang has used his ultrafast camera to capture signals traveling through nerve cells for the first time, a feat reported in the September 6 issue of Nature Communications.

Like Shapiro, Wang also creates medical imaging techniques that utilize ultrasound but work in combination with lasers. He has invented a number of photoacoustic imaging techniques that combine light and sound waves for deep, noninvasive views of biological tissue without the risk of radiation. For example, his laser-sonic scanner for detecting breast cancer tumors is currently being developed for use in health care facilities. It can pinpoint tumors in 15 seconds without the discomfort or radiation of mammograms, the current gold standard in breast cancer screening.

“We use a safe dose of laser light with the right color that can actually penetrate quite deeply into biological tissue, but the light won’t go straight, like X-ray does; it will just wander around,” Wang explains. “That’s why we resort to photoacoustics. When molecules like blood hemoglobins absorb light, they will start to vibrate, and that vibration is a sound source. We capture that sound signal, and then we can pinpoint where that signal is from and form an image.”

He compares the process to how lightning and thunder work: the lightning is the laser pulse and the thunder is the sound you expect to hear some seconds later. In the same way that you can triangulate the location of a storm using the time between these weather phenomena, Wang and his collaborators can construct an image inside the body.
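The lightning-and-thunder comparison comes down to simple arithmetic: a delay multiplied by the speed of sound gives a distance. The sketch below is only an illustration of that idea, not a description of Wang's reconstruction software. The function name, the sound speeds, and the example delays are all assumed values chosen for the example.

```python
# Illustrative only: the speeds and delays are assumed textbook-style values,
# not parameters taken from any real photoacoustic instrument.
SPEED_OF_SOUND_AIR_M_S = 343.0      # approximate, dry air near 20 °C
SPEED_OF_SOUND_TISSUE_M_S = 1540.0  # common soft-tissue approximation


def distance_from_delay(delay_s: float, speed_m_s: float) -> float:
    """Distance to a sound source, given the delay between the flash of
    light and the arrival of the sound. The travel time of the light
    itself is treated as negligible."""
    if delay_s < 0:
        raise ValueError("delay must be non-negative")
    return delay_s * speed_m_s


# Thunder heard 3 seconds after the lightning flash.
storm_km = distance_from_delay(3.0, SPEED_OF_SOUND_AIR_M_S) / 1000.0

# A sound signal detected 20 microseconds after a laser pulse.
absorber_depth_mm = distance_from_delay(20e-6, SPEED_OF_SOUND_TISSUE_M_S) * 1000.0

print(f"storm distance: {storm_km:.2f} km")
print(f"absorber distance: {absorber_depth_mm:.1f} mm")
```

A single delay only says how far away the source is, not in which direction. Pinning down a position requires delays measured at several detector locations, which is where the comparison to triangulation comes in.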

An in vivo photoacoustic tomography image, captured by Lihong Wang’s lab, of a human breast.

“You can argue that we’re extending what our eye can see far beyond the skin,” says Wang, who recently used his photoacoustic imaging techniques to look inside the brain and detect minute changes in blood concentration and oxygenation. “We’re showing surgeons what they would see after cutting open a body without having to do so.”

New Angles

While Wang works to see through objects, Changhuei Yang tries to also see around them. In May 2022, he and members of his lab reported in Nature Photonics on a technique that can detect an object of interest outside a viewer’s line of sight. The imaging method operates by using wavefront shaping, in which light is banked off a wall to generate a focused point of light to scan the object, allowing researchers to see what is out of sight.

“This technology might have use in the future for self-driving cars, as well as for spacecraft traversing around a planet where there might be, say, hidden caverns that they want to explore,” Yang says. “Something like this would allow us to do non-line-of-sight imaging and interrogation of an environment in a unique way.”

But peering around corners isn’t the only way to find hidden objects and patterns. The bulk of Yang’s work involves the development of better microscopes, through the use of sensors and computational methods, to see more deeply into biological tissues than ever before and extract information from those samples. He has also begun to use deep learning, a type of AI, to detect patterns in biological imaging that a human observer would not be able to spot.

“There are things that are likely predictive of diseases that human eyes simply cannot pick up on because our ability to recognize patterns is limited,” Yang says.

In essence, Yang wants to make machines that can be taught to see better than us, and he has made significant progress. In collaboration with Magdalena Zernicka-Goetz, Bren Professor of Biology and Biological Engineering, Yang has developed a way to use machine learning algorithms to detect subtle pattern differences in images of embryos during the in vitro fertilization (IVF) process that could indicate whether they are healthy and will result in a successful pregnancy or not.

Together with pathologists from Washington University in St. Louis, Yang and his team recently sought to verify a hypothesis many oncologists believed to be true: that if cancer cells are well encapsulated by connective tissues, they will not spread to other parts of the body. Instead, a machine learning analysis of tumor sample images for which the outcome was known indicated the opposite: when the encapsulation is leaky, metastasis risk appears to be lower. A possible explanation for why this may be true is that white blood cells are able to enter and keep the cancer cells in check.

“This whole area of building instruments and algorithms is very rich in terms of the opportunities for actually coming up with new innovations,” says Yang, who recently launched a new project aimed at making a camera to image root-soil interactions underground to learn more about the effects of climate change on crops and vegetation. “And being able to make an impact in a meaningful way is really fulfilling. Knowing that, one day, what we’re doing is going to maybe have a profound impact on pathology, for example, or IVF procedures, is something that I think drives not just me but the rest of my group as well.”

Cameras That Compute

Computer scientist Katie Bouman also uses AI to help compile images that would otherwise be impossible to create. But while Yang and his colleagues focus, both literally and figuratively, on microscopic cells and molecules, Bouman typically sets her sights on much bigger objects, like black holes, and she builds instruments that reimagine the role and function of cameras themselves in order to do it.

“For hundreds of years, cameras have been modeled off of how our eyes work, but that can only get you so far,” Bouman says. “We’re exploring what happens if you allow yourself to break the standard model for what a camera should look like. By solving for novel computational cameras that merge new kinds of hardware with software, the hope is that the synergy between them will allow you to recover images or see phenomena that are not possible to see using traditional approaches.”

Katie Bouman with the Wall Street Journal cover announcing the team’s black hole image.

Bouman first became interested in computational cameras as a graduate student at MIT, where she worked on the Event Horizon Telescope (EHT) project as a member of the team that produced the first image of a black hole in 2019. Since joining Caltech that same year, she has continued this work and led a Caltech-based team of key contributors to the EHT Collaboration’s most recent achievement: generating the first image of the supermassive black hole at the center of the Milky Way galaxy. “The big challenge of imaging any black hole is that they’re so far away and so compact that they’re really, really small in the sky,” Bouman says. “I like to say that the size is equivalent to the size of a grain of sand, if that grain of sand is in New York and I’m viewing it from Los Angeles.”

To take an actual picture of something so minuscule, she says, would require a telescope the size of Earth. Instead, the team took images from telescopes around the world to form one single image with the help of algorithms to piece together the blank spots. “If we only collect light at very few points around the world, we have to fill in the missing information,” Bouman explains. “And we must fill it in intelligently. My main goal was to take the data that we collect and to recover the underlying picture. It’s not like a normal camera where you collect all the information and you can see it with your eyes. You have to make sure that you’ve captured the range of possible images that could explain the data.”

A simple version of this kind of complex computational camera exists in smartphones. When you take a photograph using the high dynamic range (HDR) function, it actually produces numerous photos taken at different shutter speeds. The camera then employs an algorithm to pull out pieces of data from each of those images to create a composite of all the best parts. Similarly, the cameras that Bouman and her research group design are combining sensors and AI to achieve images, on many different scales, of objects and phenomena never seen before.
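The HDR idea described above can be sketched in a few lines. What follows is a toy illustration under stated assumptions, not the algorithm any particular phone or research camera uses: the function names, the weighting rule, and the two-pixel "scene" are all invented for the example. Each frame is trusted most where its pixels are neither too dark nor clipped, and the frames are combined into one estimate of relative brightness.

```python
# Toy exposure merge. Every name and number here is hypothetical.

def capture(radiance, exposure_time):
    """Simulate a sensor: brightness scales with exposure time and clips at 1.0."""
    return [min(1.0, r * exposure_time) for r in radiance]


def well_exposedness(value):
    """Weight in [0, 1]: highest for mid-gray, zero for black or clipped pixels."""
    return 1.0 - abs(2.0 * value - 1.0)


def merge_exposures(frames, exposure_times):
    """Combine same-sized frames into per-pixel relative radiance estimates."""
    merged = []
    for pixel_values in zip(*frames):
        # Each frame's estimate of scene brightness is its value divided by exposure time.
        estimates = [v / t for v, t in zip(pixel_values, exposure_times)]
        weights = [well_exposedness(v) for v in pixel_values]
        total = sum(weights)
        if total > 0:
            merged.append(sum(w * e for w, e in zip(weights, estimates)) / total)
        else:
            # No frame is trustworthy for this pixel; fall back to a plain average.
            merged.append(sum(estimates) / len(estimates))
    return merged


scene = [0.2, 4.0]          # a dim pixel and a very bright pixel
times = [1.0, 0.125]        # one long and one short exposure
frames = [capture(scene, t) for t in times]
recovered = merge_exposures(frames, times)
print(recovered)
```

In this example the long exposure clips the bright pixel, so that pixel's estimate comes entirely from the short exposure, while the dim pixel draws mostly on the long one. That is the sense in which the composite keeps "the best parts" of each frame.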

Like most imaging technologies, computational cameras have medical applications too. With machine learning expert Yisong Yue, professor of computing and mathematical sciences and co-director of Caltech’s AI4Science initiative, Bouman has worked to both speed up and improve MRI machines through the development of algorithms that help the machine adjust the images it takes in real time. (Currently, MRIs must rely on predetermined sample locations.) “Our approach allows for decisions to be made as the patient is being scanned to try to get the most informative measurements in the shortest amount of time,” Bouman says. (Tianwei Yin, Zihui Wu, He Sun, Adrian V. Dalca, and Yisong Yue collaborated on this work.)

While she expects to continue her pursuit to improve astronomical imaging, Bouman says she is interested in applying her computational cameras to fields beyond those she has already explored, such as seismology and robotics. “The small size of Caltech allows me to collaborate so much more easily across disciplines,” she says. “There are so many potential applications around campus; the challenge now is how to choose among them, because you can’t do everything.”

Katie Bouman is an assistant professor of computing and mathematical sciences and electrical engineering and astronomy, a Rosenberg Scholar, and a Heritage Medical Research Institute Investigator. She is supported by a National Science Foundation CAREER Award, the NSF Next-Generation Event Horizon Telescope Design grant, an Okawa Foundation Research Grant, a Heritage Medical Research Fellowship Award, a Schmidt Futures award, and Caltech’s Sensing2Intelligence (S2I) initiative and Clinard Innovation Fund.

Mikhail G. Shapiro is a professor of chemical engineering, a Howard Hughes Medical Institute Investigator, and an affiliated faculty member of the Tianqiao and Chrissy Chen Institute for Neuroscience at Caltech. His research is supported by the National Institutes of Health (NIH), the U.S. Army, the Chan Zuckerberg Initiative, the David and Lucile Packard Foundation, and the Pew Charitable Trusts, as well as Caltech’s Jacobs Institute, Donna and Benjamin M. Rosen Bioengineering Center, and Center for Environmental Microbial Interactions.

Lihong Wang is the Bren Professor of Medical Engineering and Electrical Engineering, the Andrew and Peggy Cherng Medical Engineering Leadership Chair, and executive officer for medical engineering. His work is funded by the NIH.

Lu Wei is an assistant professor of chemistry and a Heritage Medical Research Institute Investigator. Her work is funded by the NIH, the Alfred P. Sloan Foundation, and the Shurl and Kay Curci Foundation.

Changhuei Yang is the Thomas G. Myers Professor of Electrical Engineering, Bioengineering, and Medical Engineering and a Heritage Medical Research Institute Investigator. Funding from the NIH, Amgen, and Caltech’s Donna and Benjamin M. Rosen Bioengineering Center supports his work.