"The Universe Has Spoken"

“Ladies and gentlemen, we have detected gravitational waves.” With those words at a press conference on February 11, 2016, in Washington, D.C., David Reitze, executive director of the Laser Interferometer Gravitational-wave Observatory (LIGO), confirmed rumors that had been circulating for months: LIGO had succeeded in detecting gravitational waves, ripples in the fabric of spacetime, here on Earth.

It was a huge moment for LIGO, and for science. The detection confirmed an important prediction of Albert Einstein’s 1915 general theory of relativity: that massive bodies can curve space and time, and that their acceleration or deceleration produces gravitational waves that propagate throughout the universe.

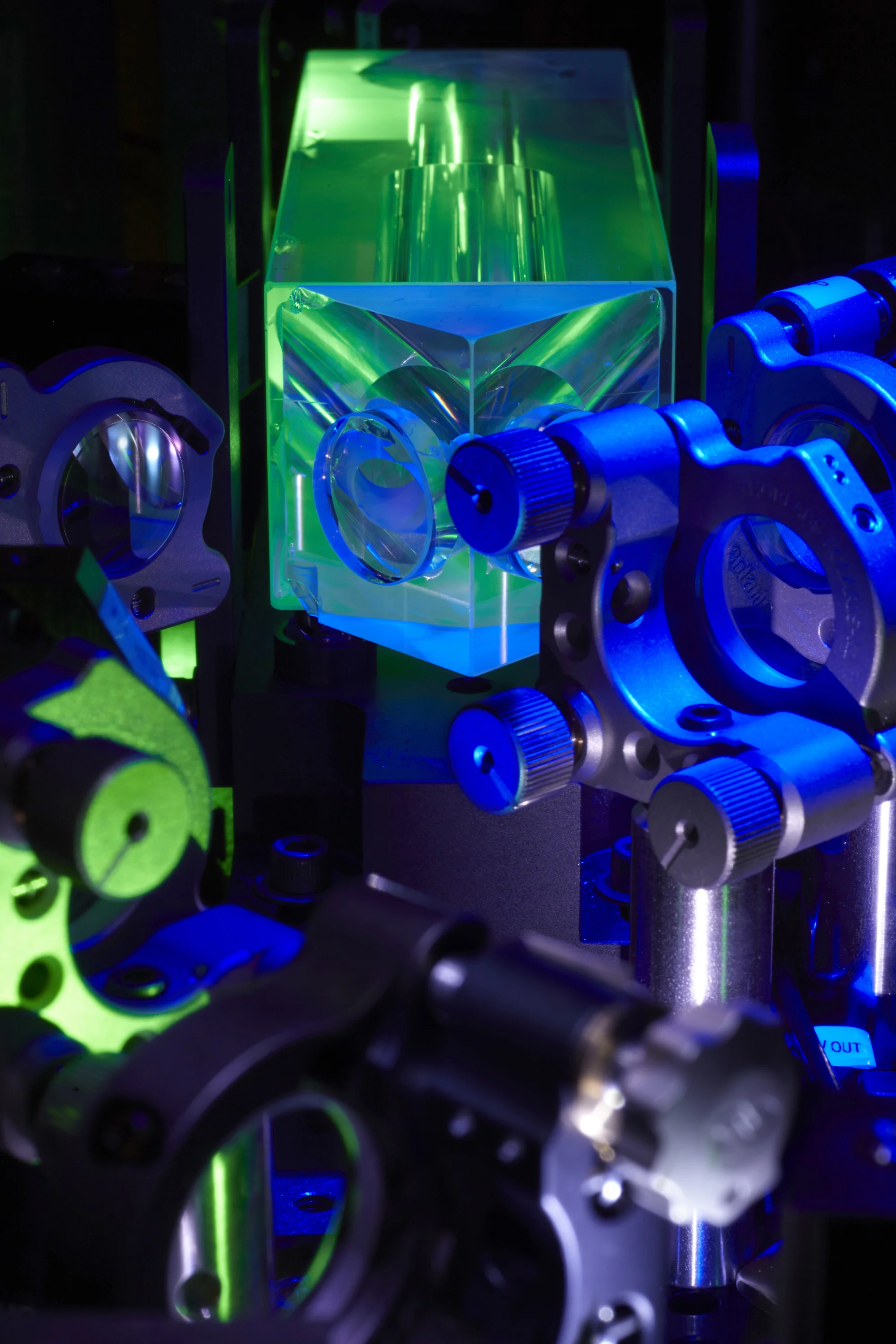

LIGO senior scientist Koji Arai explains the trends showing the variations in noise over a period of eight hours at Caltech’s 40-meter prototype interferometer. The change from quiet to noisy in the bottom panel is due to ground motion caused by local Pasadena traffic. The lack of variation in the middle panel shows that the vibration isolation systems are doing their job.

It was also a turning point for scientists who had been involved in another type of relativity: numerical relativity, a field in which physicists use supercomputers to solve massive equations that are far too difficult for humans, and even regular computers, to solve, in part because they involve warped spacetime. The solutions allow relativists to simulate events like the binary black hole merger that produced the particular gravitational waves LIGO had detected, in an attempt to figure out how the signals should look.

At the press conference, LIGO researchers unveiled the details of the detection: on September 14, 2015, a signal was picked up, independently and about 7 milliseconds apart, at LIGO’s twin interferometers in Livingston, Louisiana, and Hanford, Washington. Caltech’s Kip Thorne, one of LIGO’s founders, described the event that produced the observed waves as a violent storm in the fabric of spacetime. “We have been able to deduce the full details of the storm,” he explained, “by comparing the gravitational waveforms that LIGO saw with waveforms that are predicted by supercomputers.”

Those waveforms matched—with near-perfect precision—what the computers predicted from the merger of two black holes. Indeed, thanks to the numerical relativists and their simulations—and to the scientists who’d used those simulations to build approximate waveform models—the LIGO scientists were able to determine that one of the colliding black holes that produced their gravitational waves had a mass of 29 solar masses while the other had a mass of 36 solar masses. They were also able to deduce that the signal was from the final fractions of a second, 1.3 billion years ago, when the inspiraling black holes collided, and that they were traveling at nearly half the speed of light when they coalesced.

A Bet and a Goad

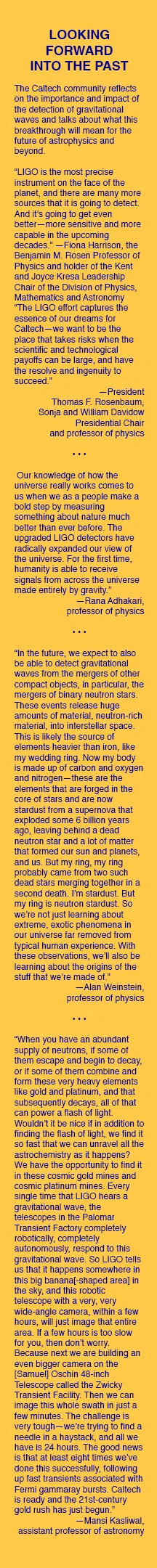

Caltech’s 40-meter prototype interferometer has been the testing ground for many theories and subsequent instruments and applications used at LIGO. Seen above are some high stability mounts for super-polished mirrors and lenses for the prototype. The glass object is a fused silica optical ring resonator, which removes the angular jittering of the laser beam, smooths out the variations in power, and makes it possible for the laser to be used in the large interferometers.

That the numerical relativists would have been able to create a simulation with that much precise and detailed information was anything but clear just two decades earlier. In fact, it was an outcome that Kip Thorne was willing to bet against.

In 1995, Thorne was chairing an advisory committee to the Binary Black Hole Grand Challenge Alliance, a group formed by the National Science Foundation to encourage numerical relativists to try to simulate merging black holes. Thorne, a theoretical physicist, bet the Grand Challenge’s researchers that they would not be able to successfully model such a merger before LIGO had detected its first gravitational-wave signal.

“I was trying to goad them into working harder, being more effective,” says Thorne. In truth, he was quite invested in their success. Along with LIGO cofounders Rainer Weiss of MIT and Ronald Drever of Caltech, as well as many other collaborators, Thorne had labored through decades of theoretical work and instrument prototyping to finally witness the construction of the facilities that would house LIGO’s interferometers in Livingston and Hanford. But without simulations, recognizing a gravitational wave, if and when one zipped by, was going to be nearly impossible. The researchers would not only have little idea what such a wave should look like, but they would also be unable to distinguish a signal produced by a binary black hole merger from one produced by a neutron star and a black hole smashing together, or by two neutron stars. And they certainly wouldn’t know how to look more deeply at these individual gravitational-wave sources and learn more about them. In other words, simulations, and the numerical relativists who produce them, were going to be key to the whole endeavor.

Relative Successes

Thorne, Weiss, Drever, and others had been developing a vision for the kinds of science that gravitational-wave observation would enable since 1968. In addition, scientists had been working with prototype interferometers not only at Caltech but also at MIT and in Glasgow, Scotland, and Garching, Germany, for more than 15 years, trying to figure out how to build the mirrors, lasers, vacuum chambers, and isolation systems that would make the instrument capable of capturing these elusive waves. LIGO is based on a fairly simple concept: the idea that laser light fired down two identical 4-kilometer “arms”—situated in an L shape—toward identical mirrors should bounce back to their point of origin at the exact same time. Any difference in the two arrival times could be caused by gravitational waves compressing spacetime a tiny bit along one length while stretching it a similar amount along the other.

Although straightforward in outline, making this concept a reality has required pushing multiple technologies to new levels of performance for decades. After all, the “tiny bit” of change that those instruments are trying to detect is an alteration in distance of about 10⁻¹⁸ meter, or one-thousandth the diameter of a proton.
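To put those numbers side by side, here is a rough back-of-the-envelope sketch in Python. It divides the quoted change in distance by the 4-kilometer arm length to get the fractional change (often called the strain), and compares the same change with the size of a proton. The proton diameter used here (about 1.7 × 10⁻¹⁵ meter) is an assumed approximate value that does not come from the article, and the function name `strain` is purely illustrative.

```python
# Back-of-the-envelope check of the figures quoted in the text.
ARM_LENGTH_M = 4_000.0        # each LIGO arm is 4 kilometers long (from the text)
LENGTH_CHANGE_M = 1e-18       # change in distance quoted in the text
PROTON_DIAMETER_M = 1.7e-15   # ASSUMPTION: approximate value, not from the article


def strain(length_change_m: float, arm_length_m: float) -> float:
    """Fractional change in arm length: the change divided by the full length."""
    return length_change_m / arm_length_m


h = strain(LENGTH_CHANGE_M, ARM_LENGTH_M)
fraction_of_proton = LENGTH_CHANGE_M / PROTON_DIAMETER_M

print(f"fractional change in arm length: {h:.1e}")
print(f"change as a fraction of a proton diameter: {fraction_of_proton:.1e}")
```

Under these assumptions, the fractional change in arm length comes out to a few parts in 10²², and the change in distance is a fraction of a thousandth of a proton's width, the same order of magnitude as the figure quoted above.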

By the mid-1990s, it seemed possible that this seemingly impossible task might actually be doable in the near term. But in order to interpret the data, the scientists would need simulations of a kind rarely seen or accomplished before—a full solution of Einstein’s 10 field equations for the highly dynamical systems that are expected to produce gravitational waves. These solutions would need to include not just the gravitational waveform for the waves traveling in any direction but also the detailed, rapidly changing shape and size of the waves’ source and its curvature of spacetime at every point.

Of course, Einstein’s equations are not easy to solve. Each of these differential equations includes hundreds or even thousands of terms that must be solved for every moment in time and space. Just keeping track of all the numbers is a challenge, not to mention figuring out how to deal with the bizarre properties of black holes and strongly warped spacetime. And in the mid-1990s, attempts to solve these equations for black holes became unstable for reasons unknown at the time.

All of which is to say that, in 1995, the numerical relativists in the Binary Black Hole Grand Challenge Alliance had little confidence that they would win the bet with Thorne. Numerical relativists across the globe had been trying to run binary black hole simulations for decades, after all, and hadn’t even been able to compute a single black hole in full 3-D without the code crashing, much less a binary, says Mark Scheel, a research professor at Caltech who leads the numerical relativity efforts on campus.

“We were really worried,” says Scheel, who, at the time of the bet, was a graduate student at Cornell. “We had no idea what we were doing wrong.”

Eventually, Thorne became so concerned about the state of numerical relativity that he decided to form a group dedicated to it at Caltech. In 2001, he left the theory end of LIGO in the able hands of other physicists, and met with Saul Teukolsky (PhD ’74), a former student of his who was running what Thorne regarded as the top numerical relativity group in the nation at Cornell University. (“Hooking yourself up with the best is a great way to get started,” Thorne notes.)

Thorne and Teukolsky formed a collaboration—now called the Simulating eXtreme Spacetimes (SXS) project—which they later expanded to include partners at the University of Toronto, Cal State Fullerton, the Albert Einstein Institute in Germany, Oberlin College, and Washington State University. With seed funding from the chair of Caltech’s Division of Physics, Mathematics and Astronomy at the time, Thomas Tombrello, and from then-provost Steven Koonin (BS ’72), followed by funds from the National Science Foundation and crucial gifts from the Sherman Fairchild Foundation and Michael Scott (BS ’65), the first CEO of Apple, Thorne established Caltech’s numerical relativity group and invited Scheel and Lee Lindblom, a Caltech colleague in theoretical physics, to help lead it.

“LIGO and the numerical relativity group were bets that Caltech made,” says Thorne. “Caltech’s willingness to invest resources in new science even when no one knows whether it is going to succeed is very impressive.”

The new group hit the ground running. Within a few years, the Caltech numerical relativists and their collaborators had done a lot of work to help those in the field understand their common stumbling blocks, including the realization that the standard way of writing down Einstein’s equations was not the right way to feed them into a computer. A reorganization of the equations into a new form enabled the computer to solve them without crashing. Selecting the coordinates to use for whichever type of system they were simulating was also problematic. In some cases, what seemed to look like a gravitational wave in a simulation was in fact the coordinates themselves wiggling back and forth due to the oddities of warped spacetime; in others, two points labeled with different coordinates eventually came to represent the same point.

And then there were singularities. These are regions near the centers of black holes where spacetime has collapsed, creating an infinite amount of gravity and an infinite amount of curvature. Perhaps unsurprisingly, computers (as well as most human brains) don’t deal well with infinities.

Then, in 2005, the field quite suddenly pushed past the bottleneck when physicist Frans Pretorius, then a postdoctoral scholar at Caltech, figured out a way of writing Einstein’s equations so that unacceptable errors were kept in check. He also incorporated into his simulation the idea that since nothing can escape a black hole, it is OK to essentially cut out its interior—a method known as excision—thus eliminating the problem of singularities.

Using these and other techniques, Pretorius managed to simulate the merger of two black holes using a supercomputer, the first such simulation ever achieved. About six months later, groups at NASA’s Goddard Space Flight Center and at the University of Texas at Brownsville, using a completely different approach, produced another successful simulation of a binary merger.

And just like that, Thorne had lost his bet. He says he couldn’t have been more pleased. “The bet was fun, and it was a way to focus the community’s attention on some issues that I think are really important,” he says.

Ready for Detection

After those initial successes, the numerical relativists, struggling for an additional decade, gradually succeeded in simulating all sorts of black hole scenarios: identical black holes that did not spin, or that did spin, or that had different masses and the same or different spins; black holes whose spins caused space to whirl, dragging the plane of the holes’ orbit into precession, which in turn caused the holes’ spins themselves to precess, like rotating toy tops. The relativists also pushed upward the number of orbits they could follow. The longest published simulation, reported in 2015 by the SXS Collaboration, followed a black hole binary through 176 orbits, climaxing in a collision and merger.

These breakthroughs have given the LIGO scientists the simulations they need to be able to identify gravitational waves when they see—or hear—them, but the relativists’ work is far from done, says Scheel. For one thing, he says, they need to figure out a way to seamlessly connect their simulations of the late epoch of a binary’s evolution, when its bodies are orbiting close together, with analyses others have done of the earlier epoch when the bodies are farther apart—analyses that use an entirely different set of analytical tools.

Indeed, Scheel says, it’s fortunate that the first LIGO event turned out to be a high-mass binary black hole system, for which LIGO’s noise prevented it from seeing the earlier epoch. “That binary is something that we can simulate very well now,” he notes. Currently the Caltech team is working on speeding up its computer code so the hundreds of simulations needed for analyzing each LIGO event can be carried out quickly. The team also is developing a so-called surrogate model that will enable them to interface their simulations’ numerical waveforms with the LIGO team’s data analysis far more efficiently than is now possible.

When the LIGO team announced the first detection, it also essentially unveiled a new way to study the universe. These waves, after all, are capable of providing information about some of the most mysterious features of the cosmos, including black holes and the Big Bang. As Reitze said, “It’s the first time the universe has spoken to us through gravitational waves. Up until now we’ve been deaf to gravitational waves, but today we are able to hear them.”

Thorne emphasized that, even after 40 years of work, the announcement marked a beginning, not an end. “With this discovery, we humans are embarking on a marvelous new quest: the quest to explore the warped side of the universe,” he said.

In the next 15 to 20 years, we humans will be helped along in that exploration by next-generation gravitational-wave windows on the universe: Advanced LIGO, which looks at gravitational waves that oscillate with periods of milliseconds, and three additional types of detectors that will be looking at gravitational waves that oscillate with longer periods. Just as radio, infrared, and X-ray telescopes have each delivered surprises and new insights about the cosmos, scientists believe the new views provided by gravitational-wave detectors will fundamentally alter our understanding of the universe.

“The future of gravitational-wave astronomy is very bright and very long term,” says Thorne, now the Richard P. Feynman Professor of Theoretical Physics. “I, personally, am looking forward to the day that, in large measure through gravitational-wave observation, we come to understand the birth of the universe. That, for me, is the biggest goal of this field . . . that, and discovering things that are totally unexpected.”